Prerequisites

- Purpose: popular streaming patterns with Apache Spark

- Apache Spark: Structured Streaming

- MariaDB, Redis

Glossary of Terms:

- Data ingestion: the transportation of data from assorted sources to a storage medium where it can be accessed, used, and analyzed by an organization. The destination is typically a data warehouse, data mart, database, or document store.

- Data marshalling: the process of transforming the memory representation of an object into a data format suitable for storage or transmission. It is typically used when data must be moved between different parts of a computer program or from one program to another.

- Data sink: a repository that accumulates and stores collected data for an indefinite period. Moving data into the sink from other data sources often involves technical format conversion, e.g. XML to JSON.
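
To make the marshalling and format-conversion terms above concrete, here is a minimal plain-Python sketch. The `Order` type, its fields, and the helper names are hypothetical and exist only for illustration. The XML conversion assumes a flat element whose children contain text only.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass, asdict


@dataclass
class Order:
    # Hypothetical record type, used only for illustration.
    order_id: int
    product: str
    price: float


def marshal(order: Order) -> str:
    """Turn the in-memory object into a JSON string for storage or transmission."""
    return json.dumps(asdict(order), sort_keys=True)


def unmarshal(payload: str) -> Order:
    """Rebuild the object from its JSON representation."""
    return Order(**json.loads(payload))


def xml_to_json(xml_text: str) -> str:
    """Convert a flat XML element (children with text only) into a JSON object."""
    root = ET.fromstring(xml_text)
    return json.dumps({child.tag: child.text for child in root}, sort_keys=True)
```

Round-tripping an object through `marshal` and `unmarshal` should give back an equal object. `xml_to_json` shows the kind of conversion a pipeline might apply before writing to a sink.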

Characteristics

- Deals with unbounded datasets: the amount of data still to come is unknown.

- One record at a time: each record is inspected, transformed, and analyzed.

- Computations on streams are real-time: records are processed, insights are generated, and results are pushed to the next stage in real time.

- Low latency: from data ingestion to delivery of insights.
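
The characteristics above can be sketched with a plain Python generator. This is not the Spark API. It only illustrates an unbounded source that is consumed one record at a time. The sensor source, field names, and the 73 °F cutoff are made-up assumptions.

```python
import itertools
from typing import Iterable, Iterator


def sensor_readings() -> Iterator[dict]:
    """Hypothetical unbounded source: yields readings forever."""
    for i in itertools.count():
        yield {"id": i, "celsius": 20.0 + (i % 5)}


def process(records: Iterable[dict]) -> Iterator[dict]:
    """Inspect, transform, and analyze each record as it arrives."""
    for record in records:
        if record["celsius"] is None:  # inspect: skip unusable records
            continue
        fahrenheit = record["celsius"] * 9 / 5 + 32  # transform
        # analyze: flag readings above an assumed cutoff
        yield {"id": record["id"], "fahrenheit": fahrenheit, "hot": fahrenheit > 73.0}


# The source never ends, so the consumer decides how much to take.
first_five = list(itertools.islice(process(sensor_readings()), 5))
```

Because the generator is lazy, each result is available as soon as its record has been processed. Nothing waits for the end of the dataset.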

Apache Spark Streaming

Capabilities: micro-batch processing (end-to-end latency as low as about 100 ms). Integration with data sources and sinks: S3, Kafka, Amazon Redshift, MongoDB, etc. State management. Event-time processing. MLlib integration.

APIs:

Streaming API: built on DStreams, the original (now legacy) API, which is built on Resilient Distributed Datasets (RDDs) and supports map-reduce-style operations.

Structured Streaming API: built on the Spark SQL engine. It supports Datasets, which process data as JVM objects, and DataFrames, which process data as rows and columns.

Streaming Analytics

What:

Analyze data in real time and publish insights for consumption by real-time dashboards and actions. Examples in some industries:

- E-commerce: analyze orders and consumer activity.

- Healthcare: listen to patient vitals (vital signs) from monitoring devices, summarized by windows to analyze patient status.

Pattern

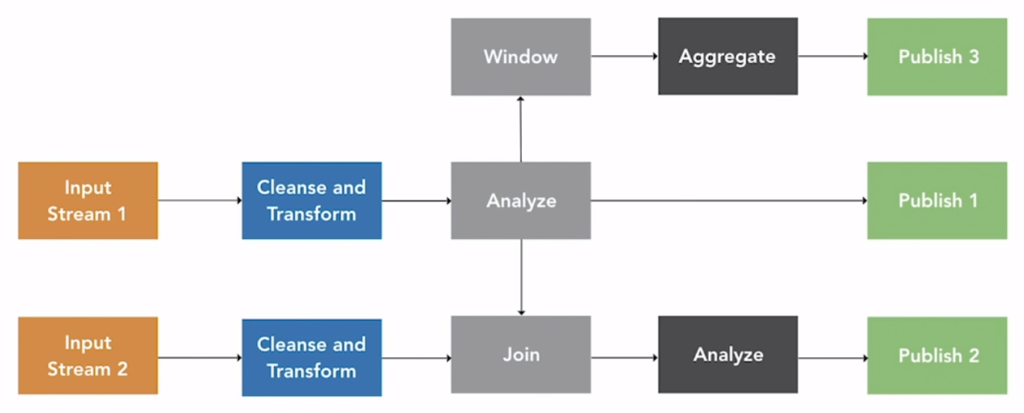

The input stream undergoes cleansing and transformation as required.

Data is then analyzed record by record.

Results are pushed to an output stream, where they feed a dashboard or database.

Windows can be applied at regular intervals and summaries published. Finally, multiple input streams can be combined using join operations in real time, and the joined data can be analyzed for further insights.
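
The stages described above can be sketched in plain Python as chained generators. This is an illustration under stated assumptions, not Spark code. The `user,clicks` line format, the field names, and the country lookup are hypothetical. The join is simplified so that the second input is a static lookup table rather than a live stream.

```python
from typing import Iterable, Iterator


def cleanse(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Drop malformed lines and turn the rest into typed records."""
    for line in raw_lines:
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 2 or not parts[1].isdigit():
            continue
        yield {"user": parts[0].lower(), "clicks": int(parts[1])}


def analyze(records: Iterable[dict]) -> Iterator[dict]:
    """Record-by-record analysis: tag each record with a simple flag."""
    for r in records:
        yield {**r, "active": r["clicks"] >= 3}


def join_with(records: Iterable[dict], lookup: dict) -> Iterator[dict]:
    """Join each record with reference data from a second source by key."""
    for r in records:
        if r["user"] in lookup:
            yield {**r, "country": lookup[r["user"]]}


raw = ["Alice, 5", "bad line", "BOB,1", "carol,x"]
countries = {"alice": "VN", "bob": "US"}
# The output list stands in for the output stream feeding a dashboard or database.
output = list(join_with(analyze(cleanse(raw)), countries))
```

Each stage consumes the previous one lazily, so a record flows through cleansing, analysis, and the join before the next record is read.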

Use cases

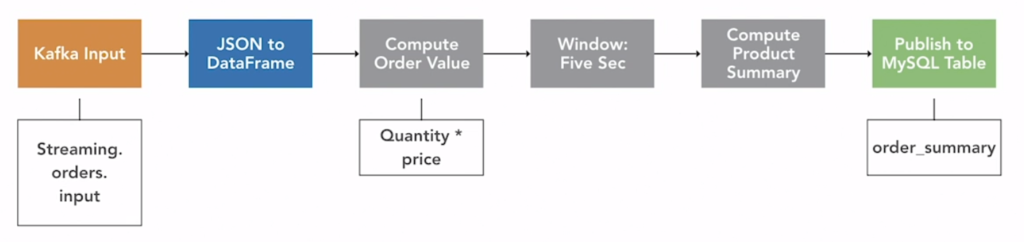

Use a Kafka topic to analyze orders in real time: every 5 seconds, compute the order value by multiplying quantity and price.
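
The 5-second summary can be sketched as a tumbling window in plain Python. The record layout (`ts` in whole seconds, `quantity`, `price`) is an assumption made for illustration, and no Kafka or Spark is involved here. In Structured Streaming this kind of grouping is typically expressed with the `window` function from `pyspark.sql.functions`.

```python
from collections import defaultdict
from typing import Iterable

WINDOW_SECONDS = 5  # from the use case: summarize every 5 seconds


def order_value_per_window(orders: Iterable[dict]) -> dict:
    """Sum quantity * price per 5-second tumbling window, keyed by window start."""
    totals = defaultdict(float)
    for order in orders:
        # Align the event timestamp to the start of its window.
        window_start = order["ts"] - order["ts"] % WINDOW_SECONDS
        totals[window_start] += order["quantity"] * order["price"]
    return dict(totals)


# Hypothetical sample orders, timestamps in seconds.
orders = [
    {"ts": 0, "quantity": 2, "price": 10.0},
    {"ts": 3, "quantity": 1, "price": 5.0},
    {"ts": 7, "quantity": 4, "price": 2.5},
]
totals = order_value_per_window(orders)
```

The first two orders fall into the window starting at 0 and the third into the window starting at 5, so each window gets its own total.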

Alerts & Thresholds

An input Kafka topic carries messages in CSV format; each message contains the timestamp of when the exception occurred and the error level.
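
A threshold check over such messages might look like the plain-Python sketch below. Several details are assumptions, not taken from the source: the message layout `<epoch seconds>,<level>`, the 10-second window, and the threshold of 2. A real pipeline would read the messages from Kafka rather than from a list.

```python
import csv
from collections import Counter
from typing import Iterable, List, Tuple

WINDOW_SECONDS = 10  # assumed window length
THRESHOLD = 2        # assumed: alert when a window has more than 2 errors of one level


def parse(message: str) -> Tuple[int, str]:
    """Parse one CSV message assumed to look like '<epoch seconds>,<level>'."""
    timestamp, level = next(csv.reader([message]))
    return int(timestamp), level.strip().upper()


def alerts(messages: Iterable[str]) -> List[Tuple[int, str, int]]:
    """Return (window_start, level, count) for every count above THRESHOLD."""
    counts = Counter()
    for message in messages:
        ts, level = parse(message)
        counts[(ts - ts % WINDOW_SECONDS, level)] += 1
    return sorted((w, lvl, n) for (w, lvl), n in counts.items() if n > THRESHOLD)
```

Counting per window and per level keeps a single noisy level from hiding behind the overall total. Only the combinations that exceed the threshold are reported.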